Artificial intelligence doesn’t emerge out of nowhere. It requires a huge amount of speech data to develop, and that’s only after the data itself has been effectively analyzed and annotated.

For your speech recognition AI project to reach its full potential, it must pass through a series of machine learning processes. One of those stages is speech data annotation. It’s a step you simply can’t ignore to make your AI solution as smart and inclusive as possible.

We like to think of speech data annotation for machine learning and AI development as being accomplished by a series of levers. They represent specific tasks that can be accomplished by certain people and require different degrees of detail.

We help you determine what works for each specific use case and help get you the best return on investment.

It’s all about figuring out which levers are effective for your specific needs.

In this blog we will cover the basics of speech data annotation, including what it is and why we do it. We will also look at the different levers we can pull and our process for getting it all done according to your purposes and price points.

What is speech data annotation?

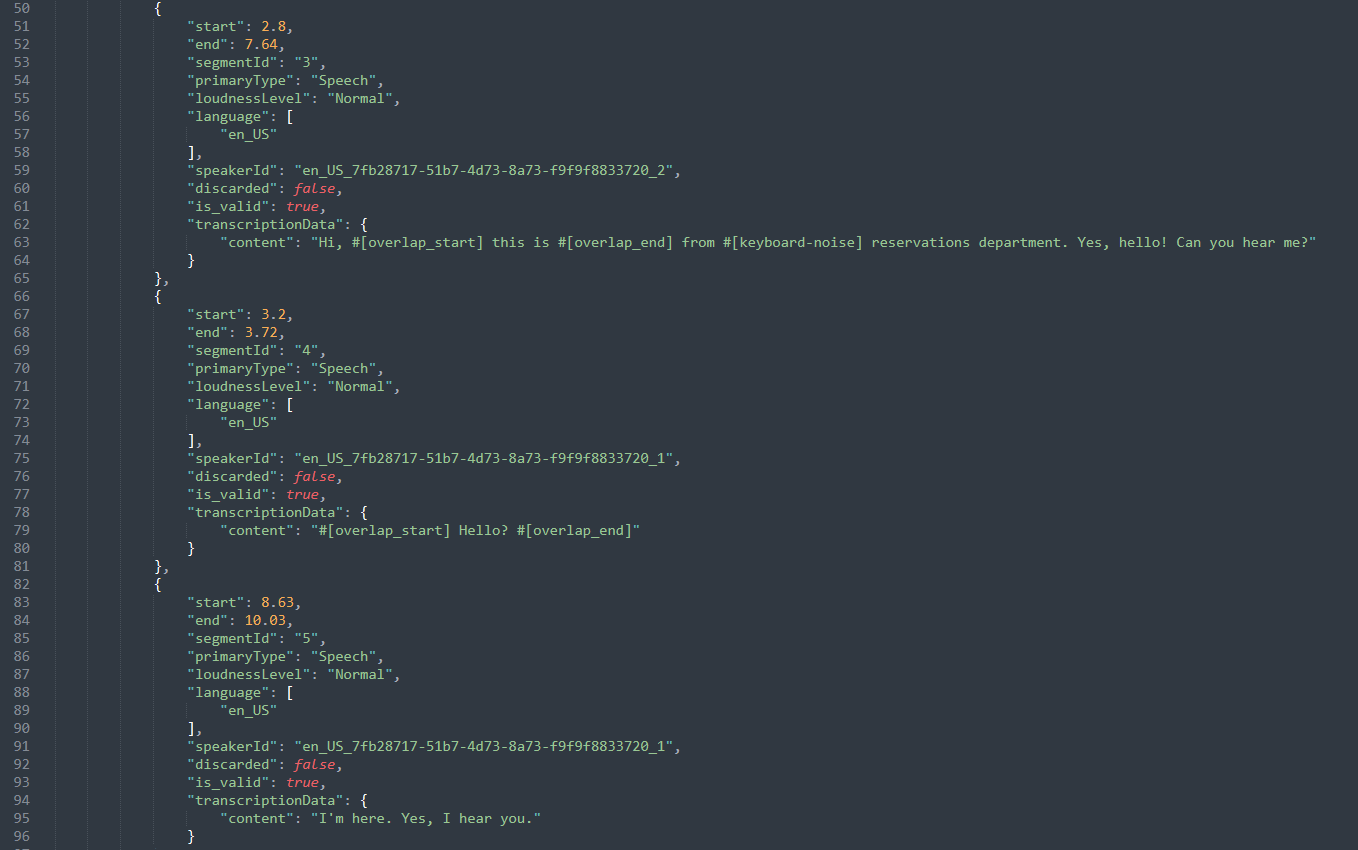

Speech data annotation is the human-guided categorization and labeling of raw audio data to make it more usable for machine learning or AI applications. Raw audio files are paired with text files containing timestamps to note key audio events.

You can annotate the verbal components of speech data (i.e. words) and the non-verbal aspects as well.

For example, imagine you’re annotating phone conversation data. Within the context of a specific conversation there can be multiple speakers, someone coughing and sneezing, a doorbell ringing, and a barking dog.

Data annotation levers for all the above can be pushed or pulled, and we can step in and advise if you’re not sure exactly what you need for your specific project.

Katja Krohn,

Katja Krohn,

Data Solutions Project Manager

We can't start with the same thing that we're supposed to train.

How Human Speech Annotation Advances AI

As we covered in our post about speech transcription, human annotation is used to cover new capabilities that automatic speech recognition can’t yet cover.

The more annotated data you use to train the model, the smarter it becomes and the closer we come to universal usability.

Speech data annotation also help increase inclusivity by processing all possible demographic combinations:

- Gender

- Age

- Languages

- Dialects

- Accents

- Non-native speakers

For example, cars need an enormous amount of speech data to reach full autonomy. To cover all possible speech use cases, we need to collect recordings of all possible commands in as many languages, dialects, and accents as possible that could be used to communicate inside the vehicle.

Then, you add the wrinkle of ambient traffic noise. The car’s speech recognition system must learn to ignore the sounds of honking cars, police sirens, and other traffic noise.

Annotators make sense of the mountain of speech data required to make the technology work. Let’s now take a closer look at what’s involved.

Different Types of Speech Data Annotation

Annotation is an umbrella term for several specific tasks performed by our data experts. Here’s a brief introduction to each.

Transcription

Transcription is the adaptation of audio to text by typing out what was said and by whom. Human speech transcription is necessary for use cases where we are trying to improve speech recognition accuracy.

For example, we transcribed speech data for Sonos to help them invent multi-room wireless home audio. They required wake word data, like Amazon’s “Alexa” and Google’s “OK Google.”

Our team went through each phrasing segment and tagged the relevant wake words. With those timestamps, the audio was cropped after the desired phrase.

This wake word speech data was used to test and tune the wake word recognition engine, ensuring that users of all demographics or dialects have an equally great voice experience on Sonos devices.

Sound and Noise Labeling

Audio annotators label and categorize clips based on linguistic qualities and non-verbal sounds. This allows for improved natural language processing (NLP) in speech recognition, chatbots, text to speech, and voice search.

To train in-car systems to communicate with humans, Nuance required not only all possible terms, accents, phrases that would be used to communicate in the vehicle, but also the labeling of in-car noises.

Field data collection is key here because you need occurrences of car horns and traffic or construction noises that will take place when a real person is trying to use the technology.

Sentiment Analysis

Sentiment analysis is the process of identifying and categorizing emotions or opinions expressed in speech or text data.

This can be helpful if you have to parse through a large amount of data, like hundreds customer reviews of your website, and you would like to understand the general attitude of your customer base.

Medallia, for example, offers a platform that helps their clients gather and analyze feedback from customers and employees.

Customers give feedback on the phone and that data is converted into valuable insights in real-time.

Sentiment analysts determine whether the person’s attitude towards a particular topic, product, or service is positive, negative, or neutral.

This allows companies to target pain points among the customer base and make smarter, more informed daily decisions to build better customer relationships and improve company culture.

Intent Analysis

Here, annotators analyze the need or desire being expressed in speech data, classifying it into several categories.

Let’s say we come across a phone conversation where the following is said: “I’ve been saving like crazy for Black Friday. iPhone X here I come!”

There are no words like ‘buy’ or ‘purchase’ directly stated, but the clear intention here is to purchase an iPhone.

An intent analysis tool would tag the second sentence as follows:

- Intention = “buy”

- Intended object = “iPhone”

- Intendee = “I”

Examples of intent analysis categories include request, command, or confirmation.

Named Entity

Entity annotation teaches NLP models how to identify the following:

- Parts of speech – adjectives, nouns, adverbs, verbs

- Proper names – people, places

- Key phrases – target keywords

They first create entity categories, like Name, Location, Event, Organization, etc., and feed the named entity recognition model with the relevant training data.

For example, we can identify three types of entities in the sentence “Jeff Bezos is the founder of Amazon, a company from the United States”:

- Person: Jeff Bezos

- Company: Amazon

- Location: United States

By tagging some word and phrase samples with their corresponding entities, they teach the NER model how to detect entities itself.

Outlining the Speech Data Annotation Process

Finding the right levers to push or pull for your specific project will get the right data in your hands and keep you from unnecessary spending.

Step 1: Determine Your Needs

We begin by asking some exploratory question, such as:

- What is the precise scope of the project?

- How much data do you need?

- What exactly needs to be annotated?

- What is your budget?

Guidelines must be set for annotating exactly what is needed from the time we receive the data through quality assurance.

Let’s say you’re developing an automated drive thru experience for a fast-food restaurant. Customers pull up and make their orders, and the AI processes the speech data in a way that ensures the accurate delivery.

Here’s you might require the transcription of the speech data (what’s being ordered and by whom), the labeling of background noises common to the context (vehicle noises, other people in the vehicle, traffic, sirens etc.), as well as a mix of intent and named entity analysis.

Each new layer makes the project more complex and requires a bigger budget, detailed guidelines, and increased levels of QA.

Step 2: Workforce Management

So, who exactly does the annotation work?

It’s a combination of gig-economy freelancers, third-party transcription vendors, crowd workers, and our in-house experts, depending on the needs of your project.

If you need high-volume, reliable transcription that doesn’t require native-language experts we go with a vendor.

If the task is simple enough to be broken into small pieces that can be annotated with no previous experience – wake words or simple voice commands, for example – we go with crowdsourcing.

When industry knowledge is key, we partner with market research groups. A task like medical transcription, for example, requires precision and confidentiality in each transcription, so it’s critical to find experts in that field. Sentiment analysis can also require some degree of expertise so the customer feedback can be put in proper context.

Finally, if lower costs are more important, we make use of cutting-edge speech recognition tools combined with crowdsourced reviews.

The training process is based on developing a clear understanding of guidelines developed in the use case definition process. Everyone is pre-screened and tested based on your needs.

Step 3: Quality Assurance

The level of QA is impacted by the complexity of your project.

It’s a constant, collaborative review process based on your specific needs.

You might want two transcriptionists who compare their work and collaborate to make sure they’re on the same page.

With Sonos, for example, our QA team did a thorough review of the processed data to ensure it met their strict requirements. We also worked dynamically on a live data collection platform so that Sonos could access the data immediately as it came in.

We work with the client to find that balance between cost and effect after determining whether they want to QA.

Rick Lin,

Rick Lin,

Solutions Architect Manager

We work with the client to find that balance between cost and outcome. We can provide really good data and we can do the QA, but that comes at a cost.

Many speech data annotation companies will take your base requirements and run with them. They have one, low-cost way of doing annotation and fit you into their one-size-fits-all processes with little to no customizability.

Others take an ‘always say yes’ approach. They don’t intuit your needs or foresee your challenges and rack up your costs in the meantime.

We look at what’s behind your request, play a consulting role, catch things that haven’t been thought of as a fresh pair of eyes, and provide a single point of contact so you’re free to focus on bigger picture items with respect to your project.

Get Impeccably Labeled Data

The speech data annotation process begins with your needs as the client.

Summa Linguae Technologies is a trusted partner to many of the world’s most prominent emerging technology companies.

We enter each project with a high level of transcription, labeling, and multilingual media monitoring expertise and experience. Combined with forethought and planning, the end result is the delivery of high-quality annotation you can use.

We’ve developed custom tools and processes that give us the flexibility to collect data to meet your exact requirements.

Contact Summa Linguae now to learn how we can create a full end-to-end data collection and annotation solution for your business.