Can machine learning and AI solutions stop the spread of fake news?

Fake news is nothing new. It has been around since the dawn of media. But people now distribute it at an extraordinary scale with the aid of artificial intelligence (AI).

“Vaccines cause autism. The Earth is flat. The 5G network spreads the coronavirus.” Conspiracy theories like these have been around for some time, and there’s no indication they will disappear anytime soon.

In fact, we have never seen misinformation spread at such a rapid rate—especially when it comes to everyday news.

Unfortunately, because of its processing capacity, AI is a key factor in the fabrication and spread of fake news.

However, there is a light at the end of the tunnel. Anti-fake-news algorithms have emerged to help combat the spread of false information.

AI and Fake News: Algorithms Write Their Own Text

The GPT-2 text generator allows you to create fake messages on any subject. OpenAI initially delayed publication of the source code for ethical reasons, but eventually it was made public.

For example, here’s a sample text based on the input topic, “the Earth is flat”:

“Earth is certainly not spherical, but that’s a small point. As for the Earth spinning like a top, that is what astronomers of old believed, too, but it’s wrong in a few fundamental ways.

According to recent research by Jeff Cramer at the Air Force Research Lab in Montgomery, Ala., the tilt of our planet’s axis is actually right around the 25.6-degree mark, a half degree under NASA’s current definition.

So a few more days of looking up the sky and you can see the sky map error, and try to come up with an idea of what the real Earth looks like, not just a featureless cloudscape.”

While the text isn’t perfect, a quick scan may not reveal that it was generated by AI.

For now, the generator only works in English. However, because its source code is publicly accessible, the algorithm can be adapted to fabricate fake messages in other languages.

It’s all similar to recent advances in text-to-image AI.

Text-based fake news, however, is only the tip of the iceberg. So-called “deepfakes” are much more advanced, and unlikely to be detected by the average person.

Why do social media platforms struggle with fake news?

Fake news flourishes when users share it on a massive scale. In fact, people are often unaware that they are distributing false information on Facebook or Twitter.

Even traditional media and recognized publications have issues when it comes to fact-checking. This is a much deeper problem, though, partly related to the demand for rapid content creation.

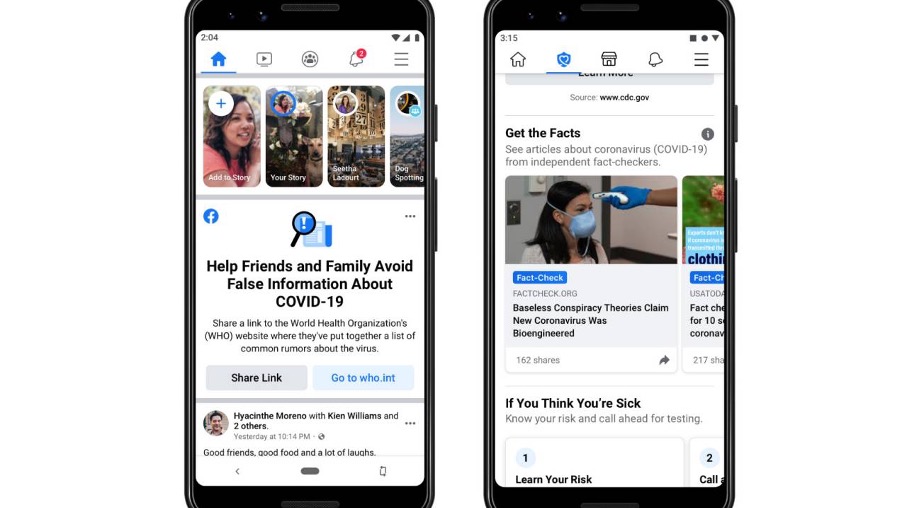

Both Facebook and Google have plans for introducing mechanisms that detect and flag false information.

At present, however, human content verifiers take care of fake news detection. These content verifiers manually check reports and facts, which is very time-consuming.

Over time, we can expect algorithms to do most of the manual work.

Is the internet too big to be verified?

AI is just beginning to fight fake news, misinformation, and untrustworthy websites.

An increasing number of platforms and NGOs monitor the authenticity of information on the internet.

However, this response is still only a small drop in the ocean.

That’s because the potential to create false content is unlimited. Algorithms can “produce” new false information on a daily basis, with the goal of discrediting a given social group, causing anxiety, or generating financial or political gain for its creators.

There is so much new content on the web that no algorithm, let alone a human, is able to “read” and verify it regularly.

Methods for detecting artificial text, video, and even audio content are in development.

But at present, these efforts have yet to be implemented by major media companies at a large scale.

The hope is that we will soon see the creation of user-friendly tools, like web browser plug-ins, that would mark unreliable sources or flag false information.
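To make the idea of such a plug-in a little more concrete, here is a minimal sketch of the simplest possible building block: checking a link against a list of domains already judged unreliable. Everything in it is an assumption made for illustration. The domain names are made up, the function names `is_flagged` and `annotate` are hypothetical, and a real tool would rely on a maintained list curated by fact-checkers rather than a hard-coded set.

```python
from urllib.parse import urlparse

# Hypothetical blocklist for illustration only; these domains are made up.
# A real tool would load a maintained list curated by fact-checkers.
UNRELIABLE_DOMAINS = frozenset({"example-hoax-news.test", "flat-earth-daily.test"})


def is_flagged(url, blocklist=UNRELIABLE_DOMAINS):
    """Return True if the URL's host is a blocklisted domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    parts = host.split(".")
    # Check the full host and every parent domain: a.b.c, then b.c, then c.
    return any(".".join(parts[i:]) in blocklist for i in range(len(parts)))


def annotate(links):
    """Pair each link with the label a plug-in might display next to it."""
    return [
        (url, "unreliable source" if is_flagged(url) else "no flag")
        for url in links
    ]


if __name__ == "__main__":
    for url, label in annotate([
        "https://news.example-hoax-news.test/story",
        "https://example.org/article",
    ]):
        print(label, url)
```

Note that “no flag” only means the source is not on the list. It says nothing about whether the content itself is true, which is why source lists like this can only ever complement actual fact-checking.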

Can the translation industry play a role in detecting fake news?

The translation industry already relies heavily on machine learning to generate and analyze natural language.

For example, machine learning is applied to localization tasks such as website translation, generating multilingual product descriptions, and providing customer service support via chatbots.

Therefore, it’s not a stretch to imagine that translation companies could play a role in developing software and algorithms that not only efficiently verify the accuracy of a translation, but also verify the truth of the claims present in that content.